Dr. Jordan Green, director of the Speech and Feeding Disorders Lab, is leading a study with technology firm Modality that could have a significant impact on patient care, diagnostic accuracy, and how the effectiveness of ALS medications is measured.

Can a digital health app be used to help diagnose ALS, track its progression, and determine whether the medicine used to treat the disease is even effective?

The National Institutes of Health is about to find out. It has awarded a $2.3 million grant to Modality.AI and MGH Institute of Health Professions to determine whether data collected through an app can be as effective as, or more effective than, the observations of clinical experts who diagnose and treat amyotrophic lateral sclerosis (ALS).

The use of artificial intelligence (AI) could substantially improve ALS diagnosis, lower costs for patients and healthcare providers, and spare patients the need to travel to clinics for assessment.

“This could be a game-changer for tracking ALS and understanding the impact of the medicines used to treat it,” said Dr. Jordan Green, the grant’s principal investigator and Director of the Speech and Feeding Disorders Lab at MGH Institute of Health Professions. “I think if we're successful, it'll change the standard clinical care practice. Our work is mostly face-to-face, often expensive and can be less attainable for the socially and economically disadvantaged and those who can’t travel or have mobility issues. With this app, we’ll be able to capture more data and in turn, help more people. It will be cheaper, faster – and we’ll get more accurate assessments.”

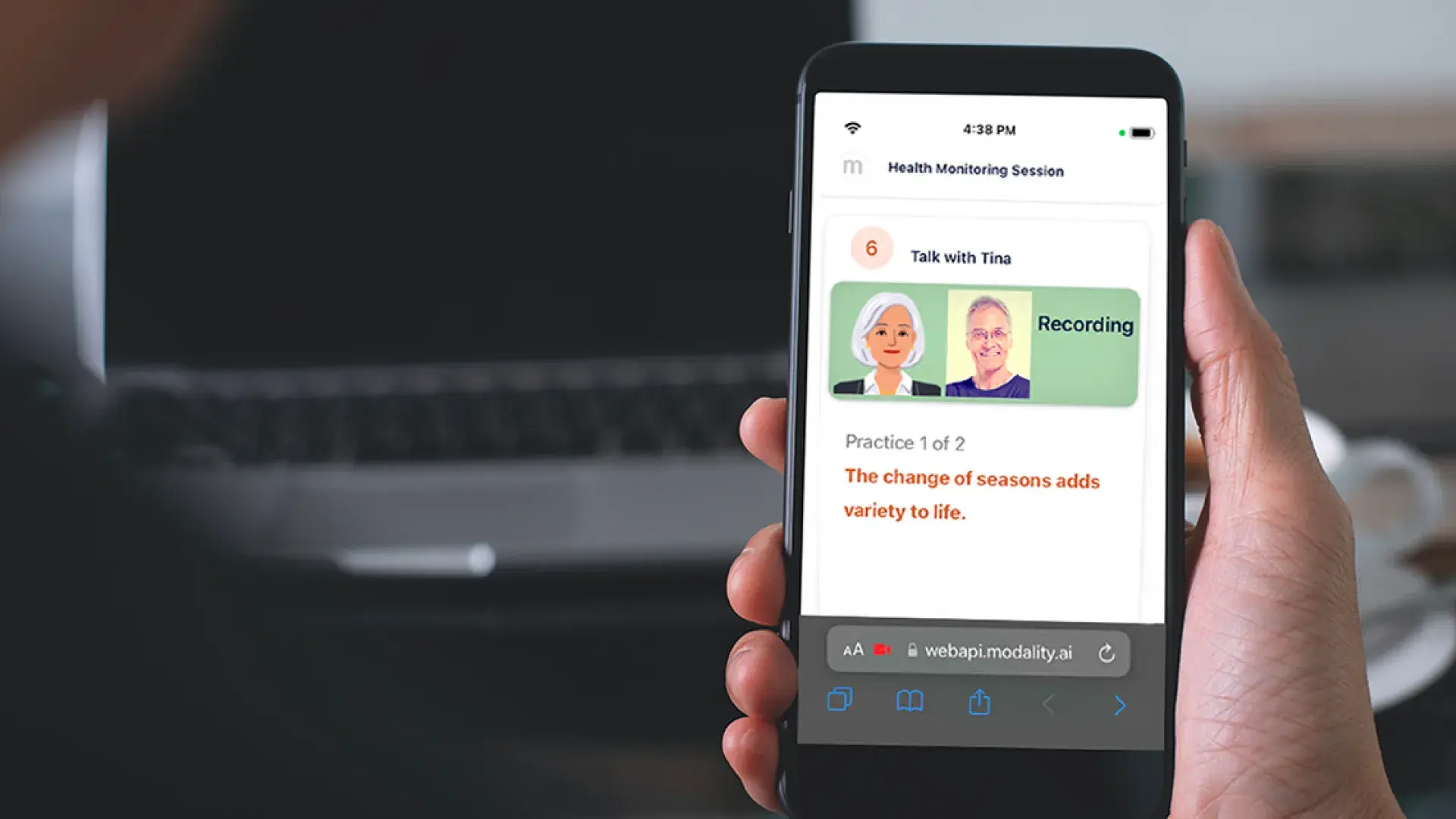

The app, developed by Modality, a San Francisco digital startup, features a virtual agent named Tina who guides patients through the assessment. Tina interviews patients much as a clinician would, recording their speech and facial movements and using AI to measure and analyze the data. A virtual agent is a cutting-edge way to collect speech data while keeping patients engaged in an immersive experience.

The app offers five main advantages:

- Earlier and more precise detection of disease-related changes, which can speed diagnosis and improve monitoring of disease progression

- Better testing of new drugs intended to reduce symptoms and slow the advance of the disease

- Automated, “self-driven” assessments that can be administered remotely: every patient receives the same standardized instructions and can complete the test from home

- Less demand on provider time, because no clinician is needed to administer the test

- A non-judgmental interaction, which can help individuals with comorbid mental health challenges

Demand for accurate, low-cost, remote speech assessments is surging across multiple sectors, including health care providers, pharmaceutical companies, and academic institutions. Speech appeals as a diagnostic marker partly because it lends itself to computer analysis and, more importantly, because changes in speech are associated with a large number of psychiatric and neurological conditions. Yet few commercial digital speech monitoring tools have been developed. The ubiquity of personal devices, together with the recent emergence of new camera sensors and automated speech analytics, now makes it possible to close this gap. Developing a solution, however, will require input from investigators with diverse backgrounds, including speech-language pathology, mobile device architecture, software privacy and security, speech analytics, and artificial intelligence.

“From the beginning, we realized that merely monitoring audio was inadequate to the task so we developed a software platform to assess facial expressions, limb movements, and even cognitive functions,” said Dr. David Suendermann-Oeft, CEO of Modality. “Our aim is to make these complex assessments easy to use, accessible, equitable, and accurate for patients, clinicians, and researchers.”

The app's potential benefits include:

- Reducing misdiagnoses and delayed diagnoses

- Increasing accuracy and accessibility while reducing the cost of clinical care

- Improving clinical trials of experimental drugs

The three-year grant, which began in June 2022, is funded by the National Institute on Deafness and Other Communication Disorders, part of the National Institutes of Health. Phase I will run for one year, and Phase II will cover years two and three. NIH's Small Business Technology Transfer (STTR) grants program aims to help commercialize innovations from federally supported research and development. These academic-industry partnerships are intended to move innovation out of the lab and into the world, improving healthcare delivery while spurring economic growth.

“I jumped at the opportunity to work with Modality,” said Dr. Green. “The members of the team have unique and extensive histories of developing AI speech applications and commercial interest in implementing this technology into mainstream health care and clinical trials. New technologies are particularly at risk of failing when they are not supported by a commercial entity.”

How It Works

Modality’s software-as-a-service web application runs on internet-connected smartphones, tablets, and computers. It is designed not only to measure the progression of ALS but also to help diagnose the disease.

Using the application is as simple as clicking a link. The patient receives an email or text message saying it is time to make a recording. Clicking the link activates the camera and microphone, and Tina, the AI virtual agent, begins giving instructions. The patient is then asked to perform tasks such as counting, repeating sentences, and reading a paragraph aloud. Throughout, the app collects data to measure variables from the video and audio signals, such as the speed of lip and jaw movements, speaking rate, pitch variation, and pausing patterns.
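To make two of these measures concrete, here is a minimal sketch of how pausing patterns and speaking rate might be derived from an audio recording. It is not Modality's method, which is not described here. It assumes the audio has already been split into fixed 10 ms frames with one energy value per frame, treats any run of low-energy frames lasting at least 250 ms as a pause (a common, simple energy-threshold approach), and assumes the number of words elicited by the task is known. The names `summarize_pauses` and `PauseSummary` and all threshold values are hypothetical and illustrative, not clinically validated.

```python
from dataclasses import dataclass


@dataclass
class PauseSummary:
    pause_count: int
    total_pause_s: float
    mean_pause_s: float
    speaking_rate_wpm: float      # words per minute over the whole recording
    articulation_rate_wpm: float  # words per minute with pauses excluded


def summarize_pauses(
    frame_energy: list[float],
    word_count: int,
    frame_s: float = 0.01,            # assumed frame length: 10 ms
    silence_threshold: float = 0.02,  # assumed energy level below which a frame is silent
    min_pause_s: float = 0.25,        # assumed minimum silence that counts as a pause
) -> PauseSummary:
    """Detect pauses as runs of low-energy frames and derive simple rate measures.

    Hypothetical sketch: leading and trailing silence are counted as pauses here;
    a real system would likely trim them and use a more robust voice detector.
    """
    min_pause_frames = round(min_pause_s / frame_s)
    pause_runs: list[int] = []  # lengths, in frames, of silent runs long enough to count
    run = 0
    for energy in frame_energy:
        if energy < silence_threshold:
            run += 1
            continue
        if run >= min_pause_frames:
            pause_runs.append(run)
        run = 0
    if run >= min_pause_frames:  # the recording may end in silence
        pause_runs.append(run)

    total_s = len(frame_energy) * frame_s
    pause_s = sum(pause_runs) * frame_s
    speech_s = total_s - pause_s
    return PauseSummary(
        pause_count=len(pause_runs),
        total_pause_s=pause_s,
        mean_pause_s=pause_s / len(pause_runs) if pause_runs else 0.0,
        speaking_rate_wpm=60.0 * word_count / total_s if total_s else 0.0,
        articulation_rate_wpm=60.0 * word_count / speech_s if speech_s else 0.0,
    )


if __name__ == "__main__":
    # Synthetic 3-second recording: a 0.3 s gap (a pause) and a 0.1 s gap (too short).
    frames = [0.1] * 100 + [0.0] * 30 + [0.1] * 100 + [0.0] * 10 + [0.1] * 60
    summary = summarize_pauses(frames, word_count=10)
    print(summary.pause_count)        # 1
    print(summary.speaking_rate_wpm)  # 200.0
```

Separating speaking rate (which includes pauses) from articulation rate (which excludes them) matters for tracking progression: a slower overall rate can come from longer pauses, slower articulation, or both, and each may point to a different kind of change.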

Tina analyzes both speech acoustics and speech movements; the movements are extracted automatically from full-face video recorded during the assessment. Computer vision techniques such as face tracking offer a non-invasive way to measure facial movements during speech accurately and to compute features from large amounts of that data.

“Tina leads the user through standard assessments and takes measurements that allow the clinician to optimize their time and expertise,” said Suendermann-Oeft. “The results are shown in a dashboard of speech analytics that can be measured across time to make assessments, for example, if the individual is responding to medication, if they need extra support, or to characterize their overall disease progression.”

Implications for Patients and Pharma, and the Proof Point

It can take up to 18 months to be diagnosed with ALS, and by the time the diagnosis arrives, the patient has already lost motor neurons responsible for speech, swallowing, breathing, and walking. Drug therapies and other interventions are likely to do the most good when they start as early as possible, while more of these motor neurons are still intact. Modality aims to meet the medical need for a diagnostic tool that identifies ALS earlier, which could make therapies more effective.

Researchers will compare results obtained through Modality’s platform with those from the top-of-the-line equipment currently used to track the progression of ALS. If the app’s results match those obtained by clinicians using state-of-the-art equipment, the researchers will have evidence that the approach is valid.
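The article does not say how "match" will be judged. As an illustration only, here is a minimal sketch of two statistics often used in method-comparison studies: Pearson correlation, which asks whether the two methods rise and fall together, and Bland-Altman limits of agreement, which ask how far apart individual measurements are. Both are assumptions here, not the study's stated analysis plan. The function names are hypothetical, and the speaking-rate values in the usage example are invented for illustration, not study data.

```python
import math
from statistics import mean, stdev


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two equal-length samples."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def bland_altman(app: list[float], reference: list[float]) -> tuple[float, float, float]:
    """Return (bias, lower, upper): mean difference and 95% limits of agreement.

    Differences are app minus reference; needs at least two paired measurements.
    """
    diffs = [a - r for a, r in zip(app, reference)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


if __name__ == "__main__":
    # Invented speaking rates (words per minute) for five hypothetical sessions.
    app_wpm = [150.0, 162.0, 140.0, 171.0, 155.0]
    lab_wpm = [148.0, 160.0, 143.0, 170.0, 152.0]
    print(round(pearson_r(app_wpm, lab_wpm), 3))
    print(bland_altman(app_wpm, lab_wpm))
```

A high correlation alone does not show agreement, since one method could read consistently higher than the other; that is why the bias and limits of agreement are reported alongside it.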

“Our collaboration with MGH Institute of Health Professions will create a standardized assessment app for ALS, which can serve as a guiding example also for other neurological and psychiatric conditions,” said Suendermann-Oeft.